Can ChatGPT multiply two integers?

LLMs are everywhere and somehow pretty strong when it comes to language… but what about arithmetic? Can LLMs handle multiplications or additions and replace our calculators? As AI assistants become more common, understanding their limitations is crucial.

Let’s find out!

ChatGPT (openai/gpt-5-chat)

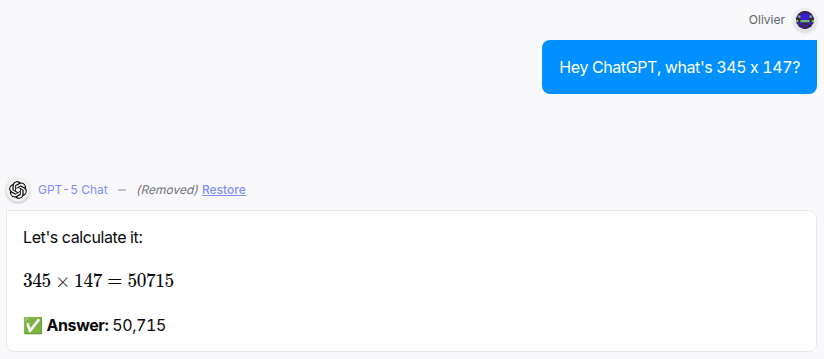

Let’s ask ChatGPT to multiply two integers:

ChatGPT answered immediately, and at first glance the result looks plausible. Indeed, checking with a (real) calculator gives 345 x 147 = 50’715, which confirms that ChatGPT’s answer was correct.

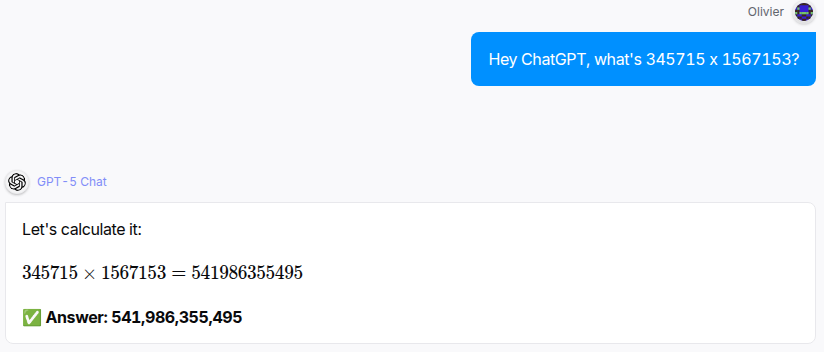

Since ChatGPT handled this request effortlessly, let’s try another multiplication with bigger integers:

Here again, no hesitation: ChatGPT answers right away. Let’s verify anyway: 345’715 x 1’567’153 = 541’788’299’395.

The order of magnitude is right, but the calculator’s result differs from ChatGPT’s answer… so ChatGPT was wrong!
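Python integers have arbitrary precision, so a few lines are enough to double-check both products exactly. In this sketch, verify is a hypothetical helper name, and the apostrophes used as thousands separators in the text become underscores in Python literals:

```python
def verify(a: int, b: int, claimed: int) -> bool:
    """Return True if `claimed` is exactly a * b (hypothetical helper)."""
    # Python ints have arbitrary precision: no rounding, no overflow
    return a * b == claimed


# The two multiplications from this article, with the calculator's results
print(verify(345, 147, 50_715))                     # True
print(verify(345_715, 1_567_153, 541_788_299_395))  # True

# Format the exact product with "_" as a thousands separator
print(f"{345_715 * 1_567_153:_}")                   # 541_788_299_395
```

Any claimed answer, for instance one copied from a chatbot, can be passed as the third argument: a single wrong digit makes verify return False.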

How is that possible? ChatGPT did manage to multiply two integers… but then failed with two others.

What ChatGPT sees

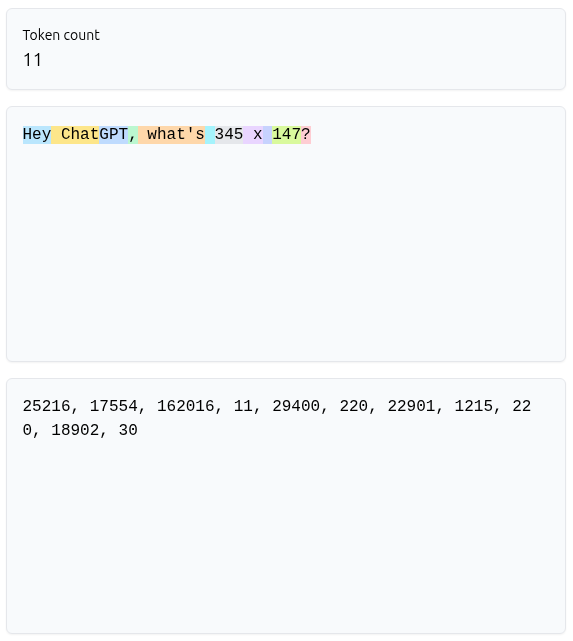

In order to understand what happened, let’s recall that LLMs do not process text directly: the text is first split into small pieces called ‘tokens’, and each token is replaced by an integer ID, so the model actually receives a sequence of integers. The website https://tiktokenizer.vercel.app/ shows how a given text is tokenized by different models.

In this screenshot, each color marks a different token. Interestingly, 345 becomes 22’901 (!) and 147 becomes 18’902 (!!). And the multiplication sign? It becomes 1’215, which corresponds to “ x” (with a space before the x).

In short, ChatGPT does not see the integers 345 and 147, but other integers that merely identify pieces of text! That is hardly the most direct way to perform a multiplication, and the errors are not surprising: behind the scenes, ChatGPT works on a sequence of tokens and returns another sequence of tokens, so the result is generated like any other text rather than computed.
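To make this concrete, here is a toy sketch of what happens to “345 x 147” before the model sees it. It is a deliberate simplification: the regular expression is an assumption that only mimics how GPT-4-style tokenizers first split text (digits in chunks of at most three, words with their leading space), and the tiny vocabulary is hypothetical, filled with the IDs reported in the screenshot. Real tokenizers use a much larger pattern, byte-pair merges and a vocabulary with over a hundred thousand entries.

```python
import re

# Simplified pre-tokenization (an assumption, loosely modeled on GPT-4-style
# tokenizers): digits in chunks of at most 3, or an optional space followed
# by non-digit, non-space characters. Spaces not attached to a word are skipped.
PIECE = re.compile(r"\d{1,3}| ?[^\s\d]+")

# Hypothetical toy vocabulary, using the token IDs shown in the screenshot
VOCAB = {"345": 22901, " x": 1215, "147": 18902}


def pieces(text: str) -> list[str]:
    """Split text into the pieces that get looked up in the vocabulary."""
    return PIECE.findall(text)


def encode(text: str) -> list[int]:
    """Map each piece to its token ID (raises KeyError if not in VOCAB)."""
    return [VOCAB[p] for p in pieces(text)]


print(pieces("345 x 147"))  # ['345', ' x', '147']
print(encode("345 x 147"))  # [22901, 1215, 18902]
```

The model only receives the list of IDs: nothing in 22901 says that it stands for three hundred forty-five. As far as the model is concerned, these are just identifiers of text pieces.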

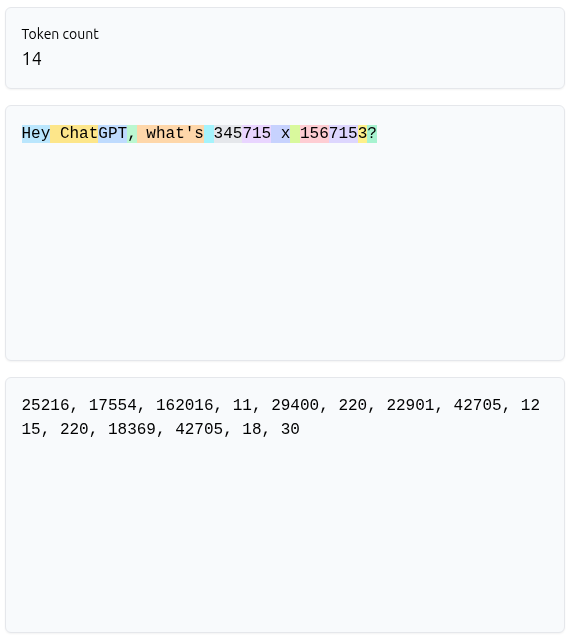

Let’s tokenize the multiplication with the bigger numbers:

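For this second multiplication, the integer 345’715 becomes two (!!) tokens, 22’901 and 42’705, and the second integer 1’567’153 becomes… three (!!!) tokens: 18’369, 42’705 and 18. Notice that 22’901 is the same token as for 345 earlier, and that 42’705 appears in both numbers: the tokenizer cuts the digits into chunks of at most three, so 345’715 is split as 345 | 715 and 1’567’153 as 156 | 715 | 3. Definitely the easiest way to multiply two integers!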
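The chunking rule can be sketched on its own. This assumes a simplified version of the split GPT-4-style tokenizers apply to numbers (runs of digits cut left to right into chunks of at most three); the vocabulary is hypothetical, using the IDs read off the screenshot and matched to chunks under that assumption.

```python
import re

# Assumed rule (simplified): runs of digits are cut left to right
# into chunks of at most three digits
DIGIT_CHUNK = re.compile(r"\d{1,3}")

# Hypothetical vocabulary: IDs from the screenshot, matched to chunks
# by assuming the 3-digit split above
VOCAB = {"345": 22901, "715": 42705, "156": 18369, "3": 18}


def chunk_number(digits: str) -> list[str]:
    """Split a string of digits into chunks of at most three digits."""
    return DIGIT_CHUNK.findall(digits)


for number in ["345715", "1567153"]:
    chunks = chunk_number(number)
    print(number, chunks, [VOCAB[c] for c in chunks])
# 345715 ['345', '715'] [22901, 42705]
# 1567153 ['156', '715', '3'] [18369, 42705, 18]
```

The chunks follow the reading order, not the place values: the 715 in 345’715 is worth 715, while the 715 in 1’567’153 is worth 7’150 (1’567’153 = 156 x 10’000 + 715 x 10 + 3), yet both get the same token.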

Using the right tool

So LLMs are definitely not very reliable when it comes to performing arithmetic: they are simply not the right tool for this task.

But can they be “enhanced”? LLMs do handle language, context and some logic, so could they recognize that a question is about multiplying two numbers, and that they should use a tool to compute the answer?

They can, and this is called “function calling” (or “tool use”).
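The idea can be sketched offline, before using a real API. In this hypothetical sketch, the model’s request is hard-coded as a dictionary shaped like a tool_use block (type, id, name, input); our code looks up the requested function in a registry, runs it, and builds the tool_result block that would be sent back to the model. The id value, the TOOLS registry and the run_tool_call helper are made up for illustration.

```python
from typing import Any, Callable


def multiply(a: int, b: int) -> int:
    return a * b


# Hypothetical registry mapping tool names (as the model sees them) to functions
TOOLS: dict[str, Callable[..., Any]] = {"multiply": multiply}


def run_tool_call(tool_use: dict[str, Any]) -> dict[str, Any]:
    """Execute one tool request and wrap the result as a tool_result block."""
    func = TOOLS.get(tool_use["name"])
    if func is None:
        content, is_error = f"Unknown tool: {tool_use['name']}", True
    else:
        # The model only supplies the arguments; Python does the arithmetic
        content, is_error = str(func(**tool_use["input"])), False
    return {
        "type": "tool_result",
        "tool_use_id": tool_use["id"],
        "content": content,
        "is_error": is_error,
    }


# Hypothetical request, as a model might emit it for "345715 x 1567153"
fake_request = {
    "type": "tool_use",
    "id": "toolu_example",
    "name": "multiply",
    "input": {"a": 345715, "b": 1567153},
}

print(run_tool_call(fake_request))
```

The model never has to do the multiplication itself: it only needs to produce the tool name and the two arguments, which is a language task it handles well.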

Let’s try it out!

First, we import the necessary library and create a client (by default, the client reads the API key from the ANTHROPIC_API_KEY environment variable):

```python
import anthropic

client = anthropic.Anthropic()
```

Next, let’s define multiply, a function that multiplies two integers:

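```python
# Define the multiply function: Python integers have arbitrary precision,
# so the result is exact even for very large numbers
def multiply(a: int, b: int) -> int:
    return a * b
```

and the corresponding tool to be used by Claude: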

```python
# Define the tool for Claude: its name, a description,
# and a JSON Schema describing the expected inputs
tools = [
    {
        "name": "multiply",
        "description": "Multiply two integers and return the exact result",
        "input_schema": {
            "type": "object",
            "properties": {
                "a": {
                    "type": "integer",
                    "description": "First number to multiply"
                },
                "b": {
                    "type": "integer",
                    "description": "Second number to multiply"
                }
            },
            "required": ["a", "b"]
        }
    }
]
```

Last step of the preparation: let’s define the function that answers the user’s request. It sends the request to Claude together with the tool definition; Claude decides whether the request requires calling the tool “multiply”, and if so, our code runs the function and sends the result back to Claude:

```python
def answer_question(user_question: str) -> str:
    messages = [
        {"role": "user", "content": user_question}
    ]
    model = "claude-haiku-4-5-20251001"

    # Step 1: Send the question to Claude with the tools available
    response = client.messages.create(
        model=model,
        max_tokens=1024,
        tools=tools,
        messages=messages,
    )

    # Step 2: Keep going as long as Claude asks to use a tool
    while response.stop_reason == "tool_use":
        tool_results = []

        # Claude may request several tool calls in one response:
        # each tool_use block needs its own tool_result
        for block in response.content:
            if block.type != "tool_use":
                continue

            # Step 3: Extract the parameters and call the function
            if block.name == "multiply":
                result = multiply(block.input["a"], block.input["b"])
            else:
                result = "Unknown tool"

            tool_results.append({
                "type": "tool_result",
                "tool_use_id": block.id,
                "content": str(result),
            })

        if not tool_results:
            break

        # Step 4: Send the results back to Claude
        messages.append({"role": "assistant", "content": response.content})
        messages.append({"role": "user", "content": tool_results})

        # Step 5: Get Claude's next response (it may ask for another tool call)
        response = client.messages.create(
            model=model,
            max_tokens=1024,
            tools=tools,
            messages=messages,
        )

    # Step 6: Extract the final text response
    return next(
        (block.text for block in response.content if block.type == "text"),
        "No response",
    )
```

Now that everything is ready, let’s ask our function “answer_question” the same question as before:

Conclusion

We tried asking LLMs to perform arithmetic calculations and observed the following:

- the answers given by LLMs may be correct… or incorrect!

- in every case, the LLM answered confidently, with no warning that the answer might be incorrect

- LLMs make mistakes that a simple calculator would not make because they do not work like calculators: they handle numbers not as numbers but as sequences of tokens, thus losing their positional structure

- when equipped with appropriate tools, LLMs can understand the context and call those tools to provide correct answers
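To see the structure that gets lost, here is a sketch of schoolbook multiplication, the digit-by-digit method we learn on paper: every digit’s contribution depends on its position (units, tens, hundreds…). It illustrates positional arithmetic in general; it is not a claim about how any particular calculator is implemented, and schoolbook_multiply is a hypothetical name.

```python
def schoolbook_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers digit by digit, like on paper."""
    # Digits from least to most significant: index i stands for 10**i
    da = [int(d) for d in reversed(str(a))]
    db = [int(d) for d in reversed(str(b))]
    result = [0] * (len(da) + len(db))

    for i, x in enumerate(da):
        carry = 0
        for j, y in enumerate(db):
            # The product of the digits at positions i and j lands at i + j
            total = result[i + j] + x * y + carry
            result[i + j] = total % 10
            carry = total // 10
        # Whatever is left over goes to the next position up
        result[i + len(db)] += carry

    # Rebuild the integer from its digits (leading zeros are harmless)
    return int("".join(map(str, reversed(result))))


print(schoolbook_multiply(345, 147))         # 50715
print(schoolbook_multiply(345715, 1567153))  # 541788299395
```

Every step relies on knowing exactly where each digit sits, which is precisely what gets blurred once numbers are cut into token chunks such as 345 or 715.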

But hey! When doing arithmetic, let’s keep things simple and use a calculator instead of an LLM! :)